Annotations

Annotations started out in Java as a simple idea: a way to signal common information, like the @author of a piece of code, to the tools that read Java code. You know, the things you need to annotate within your code. Think: NOTE. You marked an annotation with an @ followed by its type. (Strictly speaking, these documentation markers are Javadoc tags written inside comments; the @-annotations that became a language feature in Java 5 grew out of the same idea.)

Programmers also used them to record meta-information about their code for documentation generators, so they could document their code inside the code itself instead of writing the documentation in a separate system like Notepad or hand-written HTML. For instance, you could add notes to a function you created (in Java, a method) and have the documentation tool (Javadoc) automatically generate an entry for it in the list of all your functions, with its short informal description, its parameters (the data it accepts), and the type of data it returns. In other words, annotations were made to annotate things (duh), including types of data, and there could be different types of annotations.

Maintaining documentation is a hassle, so this was a welcome addition to the language.

For example, if we wrote a function calculating Pi…

```java
void calculatePi(int depthOfApproximation) {
    // doStuffHere
}
```

it would have the following written right above it, inside a Javadoc comment:

```java
/**
 * @param depthOfApproximation an integer determining how accurate our Pi approximation is.
 *        Higher numbers result in more iterations of the algorithm, thus improving the
 *        accuracy of our Pi approximation.
 */
```

In general, annotations follow these formats:

```
@<annotationType> <variableName> <description>
```

for parameter annotations. Or…

```
@<annotationType> <description>
```

for returned-data annotations.
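Here's roughly what that looks like on a version of the Pi function that actually does something. This is a sketch, not code from any real project: the class name PiDoc, the choice of the Leibniz series, and the placeholder author are all made up for illustration. The interesting part is the comment block: the @param and @return tags sit right above the method, and the class carries an @author tag.

```java
/**
 * A tiny class whose only job is to show Javadoc tags in context.
 *
 * @author Example Author (hypothetical)
 */
public class PiDoc {

    /**
     * Approximates Pi with the Leibniz series: 4 * (1 - 1/3 + 1/5 - 1/7 + ...).
     *
     * @param depthOfApproximation an integer determining how accurate our Pi approximation is.
     *        Higher numbers result in more iterations of the algorithm, thus improving the
     *        accuracy of our Pi approximation.
     * @return the approximation of Pi after {@code depthOfApproximation} terms
     */
    public static double calculatePi(int depthOfApproximation) {
        double sum = 0.0;
        for (int k = 0; k < depthOfApproximation; k++) {
            // Terms alternate in sign: +1/1, -1/3, +1/5, -1/7, ...
            double term = 1.0 / (2 * k + 1);
            sum += (k % 2 == 0) ? term : -term;
        }
        return 4.0 * sum;
    }

    public static void main(String[] args) {
        // Prints a value close to Math.PI; the Leibniz series converges slowly.
        System.out.println(calculatePi(1_000_000));
    }
}
```

Running `javadoc -d docs PiDoc.java` should turn those comments into an HTML page listing calculatePi with its parameter and return descriptions, which is the whole "documentation lives in the code" workflow in a nutshell.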

It was simple, effective, and nice. But that’s not how it stayed. The one thing you can count on from programmers is that they will use and abuse a tool as much as possible before realizing they shouldn’t have. There is a difference between having a simple toolset whose parts work together (like a composable instruction set) and turning your nice pocket knife into a painful-to-carry Swiss Army Knife.

Then, in Java 5, annotations became a real language feature, and tools could read them not only from documentation comments but also at compile time (through annotation processors, which can generate new source files) and at run time (through reflection). This was powerful for one reason: it worked like compiler directives. You could now have code that writes or rewires your code. “Yo dawg, I heard you like some code with your code, so I made this compiler directive to inject some code into your code injected into your other code, dawg.” It was so similar to the preprocessor directives and macros of C and C++ that Java developers embraced it as a tool for metaprogramming. They could make boilerplate go away, and give the language new features simply by letting the programmer place an annotation that changes the behavior of the language. Now, with the power of terrible language design, the annotation could mean literally anything.
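To see why an annotation can mean anything, notice that an annotation does nothing on its own; some other code has to go looking for it. Below is a minimal sketch using only the JDK's reflection API. The @Shout annotation, the Greeter class, and the invoke helper are hypothetical names invented for this example; frameworks do something along these lines at a much larger scale.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

public class AnnotationDemo {

    // Hypothetical annotation. By itself it is just metadata, kept around
    // at run time (RUNTIME retention) so reflection can see it.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    public @interface Shout {
        int times() default 1;
    }

    public static class Greeter {
        @Shout(times = 3)
        public String greet() {
            return "hello";
        }

        public String plain() {
            return "hi";
        }
    }

    // The "meaning" of @Shout lives entirely here, in code that reads the
    // annotation. Change this method and the same annotation means something else.
    static String invoke(Object target, String methodName) {
        try {
            Method m = target.getClass().getMethod(methodName);
            String result = (String) m.invoke(target);
            Shout shout = m.getAnnotation(Shout.class);
            if (shout == null) {
                return result; // no annotation: behave normally
            }
            return (result.toUpperCase() + "!").repeat(shout.times());
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Greeter g = new Greeter();
        System.out.println(invoke(g, "greet")); // HELLO!HELLO!HELLO!
        System.out.println(invoke(g, "plain")); // hi
    }
}
```

Nothing in greet() says its result should be uppercased or repeated. That behavior lives in invoke(), somewhere else entirely, which is exactly the kind of indirection that makes annotation-heavy code hard to follow.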

Sounds powerful… and confusing as hell. And it is. Here’s a page that lists some of these annotations. If you skim it, you’ll see there is no limit to the kind of bullshit that can go on in the language because of annotations. The bullshit was given a name: Spring.

Spring Core Annotations | Baeldung

And, much like a stiff overweight Spring compressed to its maximum potential energy, if you try to unravel it, it jumps right at you and smacks you in the face with all its might. Point that thing away from your eyes.

Because of annotations, now you have NO sense of what is going on in your code. You are forced to stop trying to understand your code and the language and go read someone else’s idea of how the annotation system should be used. A big black box of bullshit.
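Here's a toy version of that black box, to show roughly how field injection works. This is NOT how Spring is implemented; it's a hypothetical, stripped-down container (ToyContainer, @Wire, Repository, and Service are all invented names) that uses plain reflection to fill annotated fields, loosely in the spirit of Spring's @Autowired.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.HashMap;
import java.util.Map;

public class ToyContainer {

    // Hypothetical marker annotation for fields the container should fill in.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.FIELD)
    public @interface Wire {}

    public static class Repository {
        String find() { return "data"; }
    }

    public static class Service {
        @Wire Repository repo; // never assigned anywhere in this class

        String run() { return "service got " + repo.find(); }
    }

    // One shared instance per class, like a very naive singleton scope.
    private final Map<Class<?>, Object> singletons = new HashMap<>();

    // Creates (or reuses) an instance of the type, then fills every @Wire field.
    public <T> T get(Class<T> type) {
        Object existing = singletons.get(type);
        if (existing != null) {
            return type.cast(existing);
        }
        try {
            T instance = type.getDeclaredConstructor().newInstance();
            singletons.put(type, instance); // register before wiring fields
            for (Field f : type.getDeclaredFields()) {
                if (f.isAnnotationPresent(Wire.class)) {
                    f.setAccessible(true);
                    f.set(instance, get(f.getType()));
                }
            }
            return instance;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("cannot build " + type.getName(), e);
        }
    }

    public static void main(String[] args) {
        Service s = new ToyContainer().get(Service.class);
        System.out.println(s.run()); // service got data
    }
}
```

Read Service by itself and `new Service().run()` would throw a NullPointerException, since repo is never assigned. Built through the container, it works, because code somewhere else sees @Wire and quietly assigns the field. Multiply that by a few hundred annotations and you get the experience of reading a Spring application.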

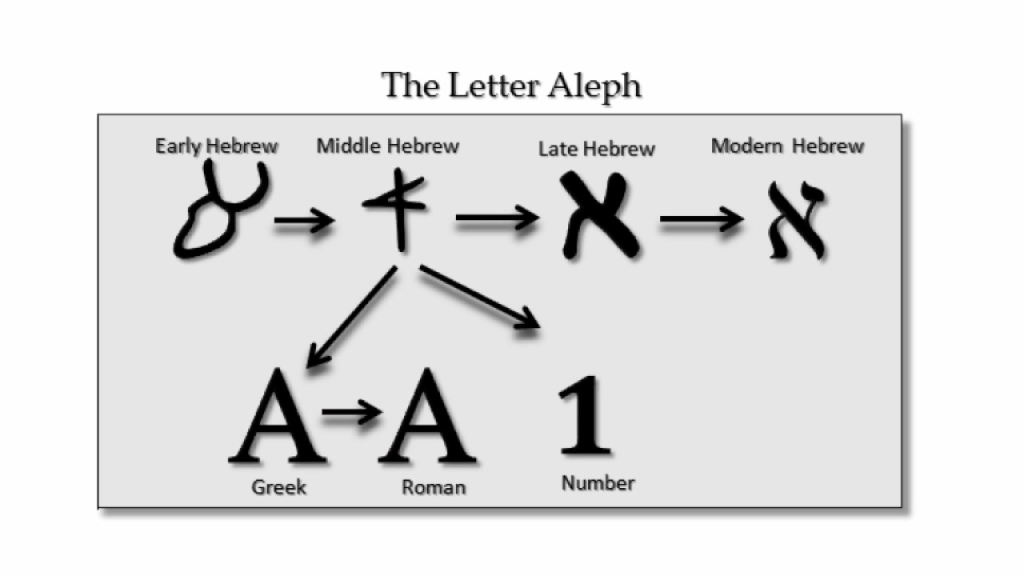

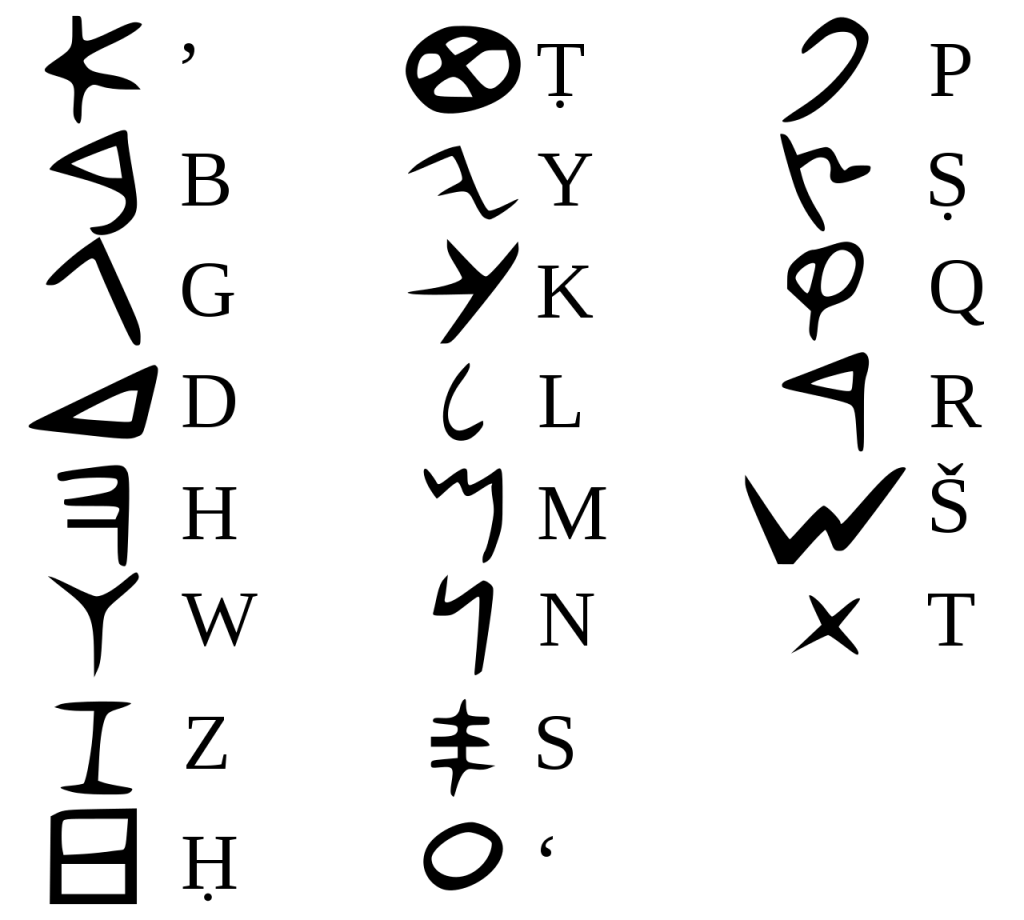

You might say, “Oh, this isn’t a big problem… we live in a world of ever-changing language.” But this is not an equivalent analogy. While the English language evolves over time and has dialects (ebonics anyone?), not everyone speaks every dialect, and the general language itself mutates very slowly. Technically, it follows a morphological evolutionary process, which, informally speaking, means words retain a similar “shape” or meaning but warp over time in somewhat predictable ways. This is the basis of etymology, and of the changes in the alphabet from ancient Phoenician through ancient Greek and Latin to the modern English alphabet.

Granted, they are sometimes very crappy renditions of the modern day equivalent:

At least… unless we’re talking about Gen Z’s use of made-up stupid words like “Cheugy” and redefining existing words to be completely unrelated to their real definition, like “Bet”. Anyone with a brain should be of the opinion that Gen Z needs to read a dictionary. Words have meanings. And you don’t get to just overload their definition ad infinitum with nonsensical meanings, which other expressions already describe quite well. It’s like Gen Z was totally unaware of all the words already out there, or had a problem with words longer than 2 syllables, so they decided to reuse the short ones. But hey, if you agree with me…don’t say “Bet”—please.

But, back to the point: the abuse of annotations is a huge part of why Java is considered “bloated” according to my brother, and an unlearnable pile of shit according to me. Languages are meant to be acquired, and to be acquired, they must not require so much memorization of very specific things. The human brain NEEDS to be able to generalize the behavior of a system to understand it, but with such a large potential for changing how the language works, we may as well stop calling a Spring-powered application a Java project.

If there’s anything we learned from regular expressions, it’s that a challenging language within a challenging language is a terrible idea. (And let’s face it, imperative programming languages are inherently challenging for human intuition.)
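Java itself shows the regex problem nicely, because a regex in Java is written inside a string literal, and both languages use the backslash as an escape character. A small sketch using the standard java.util.regex package (the class and method names are just for illustration):

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class RegexInJava {

    // Regex language: \d+\.\d+ means "digits, a literal dot, digits".
    // Java string-literal language: backslash is also an escape character,
    // so each regex backslash must be doubled in the source code.
    static final Pattern DECIMAL = Pattern.compile("\\d+\\.\\d+");

    // To match ONE literal backslash, the regex needs two backslashes,
    // and the Java literal escapes each of those again: four in total.
    static final Pattern BACKSLASH = Pattern.compile("\\\\");

    static boolean isDecimal(String s) {
        return DECIMAL.matcher(s).matches();
    }

    static int countBackslashes(String s) {
        Matcher m = BACKSLASH.matcher(s);
        int count = 0;
        while (m.find()) {
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(isDecimal("3.14"));                 // true
        System.out.println(isDecimal("3x14"));                 // false
        // The literal below is the 11-character string C:\Users\me
        System.out.println(countBackslashes("C:\\Users\\me")); // 2
    }
}
```

Four backslashes in the source to match one backslash in the input: two languages' escaping rules stacked on top of each other.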

And… I’m not the only one who noticed this horror in Java. I’ve learned to trust my intuition about programming language design, because every time I recall something I hated when I first encountered it during my college degree, including object-oriented programming, I later found out that my instincts were correct. It may have taken me 10 years to realize it, but after reading about Alonzo Church, the lambda calculus, and the language it inspired, Lisp (LISP), I now understand how my early experiences in programming shaped my intuitive understanding of computation and its complexity.

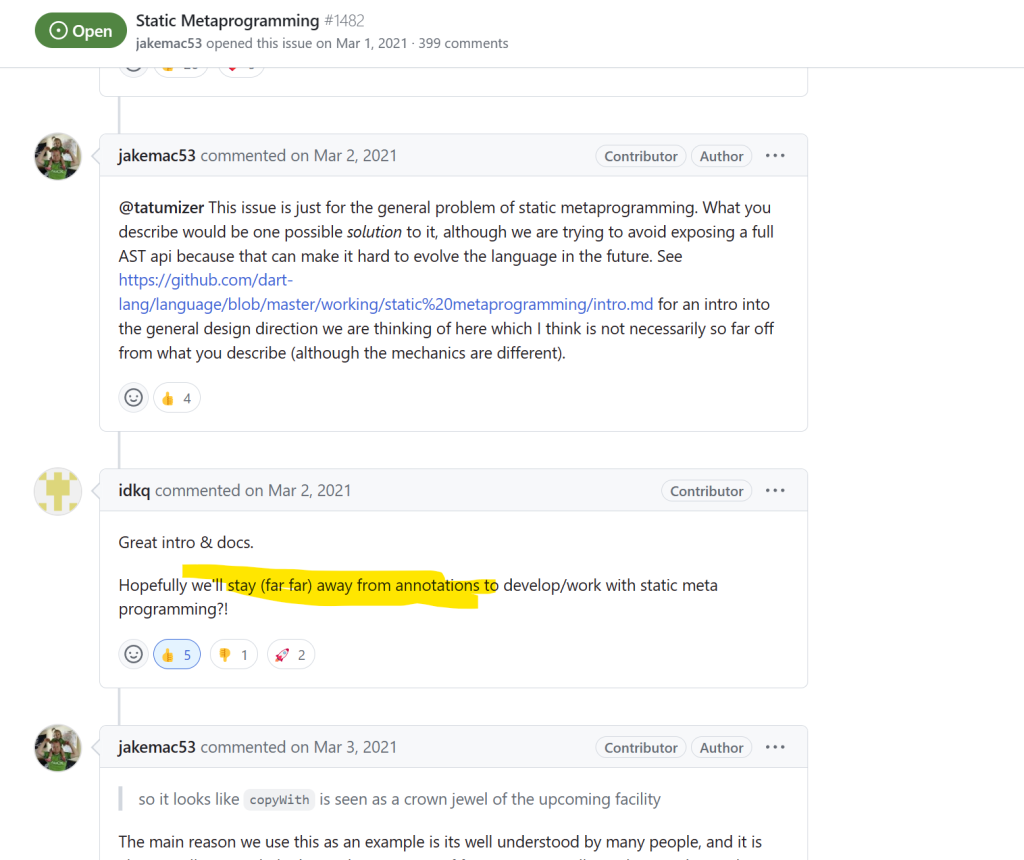

Below, you can see part of a discussion about the Dart language design. Dart is a language developed by Google, mainly for use with the Flutter Software Development Kit, which provides a set of tools called a “framework” to help unify and simplify developing cross-platform apps.

Flutter is a powerful framework that compiles Dart code to native machine code for Android, iOS, Windows, and even Linux, and to JavaScript (not machine code) for the Web. If there ever was a single language to invest in for the current state of application development, this would be it. It is also developed by Google, so it has good stewards of design and cleanliness. While the language itself borrows much inspiration from JavaScript and Kotlin (which in turn borrows inspiration from Java), it is still not quite at the level of Lisp, Haskell, Erlang, or Elixir in terms of language design for modern computational problems. However, as noted on Wikipedia, it is one of the languages that implements the Actor model. I am newish to Dart, so I may have something to learn before I can confidently criticize its origins as a Java derivative.
